Decoding AI Insights: Explainable AI Unveiled in 2024

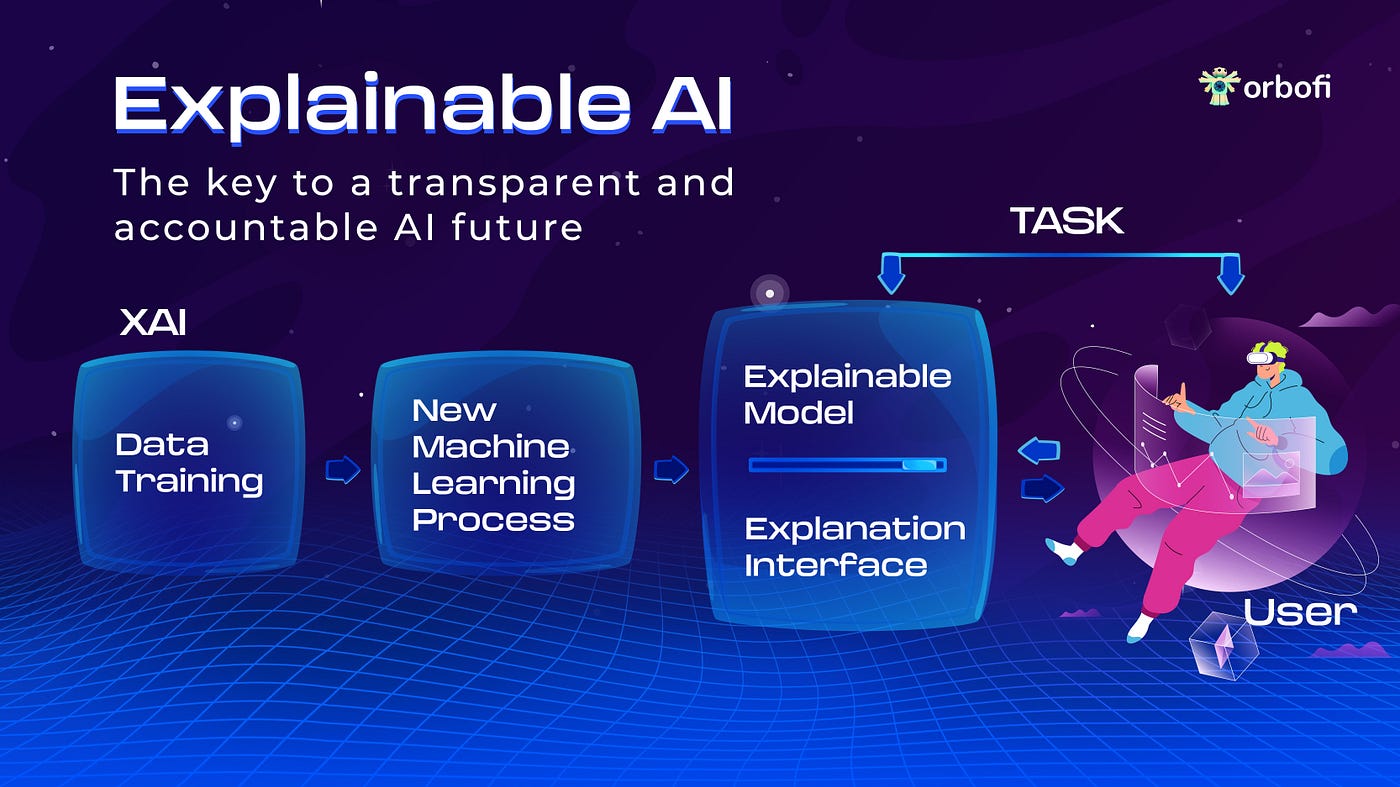

Introduction to Explainable AI

Explainable AI, or XAI, is emerging as a critical component of artificial intelligence systems. In 2024, understanding and interpreting AI decisions matters more than ever. This article examines the advances and implications of Explainable AI in 2024, explaining why it is significant and how it is transforming the AI landscape.

The Need for Transparency in AI

As AI systems become more pervasive in our daily lives, there is a growing need for transparency and accountability. Traditional black-box AI models, where decisions are made without clear explanations, can raise concerns about bias, ethics, and trust. Explainable AI addresses these concerns by providing insights into how AI algorithms arrive at specific decisions.

Advancements in Interpretable Models

In 2024, there have been significant advances in interpretable AI models, which are designed to produce results that are not only accurate but also understandable to humans. Techniques such as rule-based models, inherently interpretable algorithms (for example, linear models and shallow decision trees), and attention mechanisms help create AI systems whose behavior can be explained and validated.
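
As a minimal illustration of a rule-based model, the sketch below implements a tiny decision list: rules are checked in order, and the first rule that matches decides the outcome and doubles as the explanation. The rules, thresholds, and feature names (`amount`, `account_age_days`, `is_foreign`) are hypothetical and chosen only for illustration; this is not any particular product's method.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical feature record: feature name -> numeric value
Record = Dict[str, float]


@dataclass
class Rule:
    """One human-readable rule: a condition, the decision it yields, and its text."""
    description: str
    condition: Callable[[Record], bool]
    decision: str


class DecisionList:
    """Ordered rules; the first rule that matches decides the outcome.

    The matched rule's description is returned as the explanation,
    which is what makes this model interpretable by construction.
    """

    def __init__(self, rules: List[Rule], default: str) -> None:
        self.rules = rules
        self.default = default

    def predict(self, record: Record) -> Tuple[str, str]:
        for rule in self.rules:
            if rule.condition(record):
                return rule.decision, rule.description
        return self.default, "no rule matched; default decision"


# Hypothetical rules for flagging transactions for manual review.
model = DecisionList(
    rules=[
        Rule("amount above 10,000", lambda r: r["amount"] > 10_000, "review"),
        Rule("new account (under 30 days) and foreign transaction",
             lambda r: r["account_age_days"] < 30 and r["is_foreign"] == 1, "review"),
    ],
    default="approve",
)

decision, reason = model.predict({"amount": 250.0, "account_age_days": 12, "is_foreign": 1})
print(decision, "-", reason)
```

Because every prediction carries the rule that produced it, a reviewer can check the reasoning directly, at the cost of the flexibility a learned black-box model would offer.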

Ethical Considerations and Fairness

The ethical implications of AI have become a focal point of discussion, and Explainable AI plays a crucial role in addressing them. By making the decision-making process transparent, XAI helps practitioners identify and mitigate biases in AI models and work toward fair and equitable outcomes. This is particularly important in high-stakes applications such as hiring, finance, and healthcare.
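
One simple, widely used bias check compares selection rates across groups (demographic parity). The sketch below computes each group's rate of positive decisions and the ratio of the lowest rate to the highest. The 0.8 cutoff echoes the "four-fifths" rule of thumb from US employment-selection guidelines and is used here only as an illustrative threshold, not a universal standard; the group labels and decisions are made-up data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of positive decisions (1) per group, from (group, decision) pairs."""
    positives: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up hiring decisions: (group label, 1 = advanced to interview, 0 = rejected)
decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
             ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)             # {'A': 0.75, 'B': 0.25}
print(round(ratio, 3))   # 0.333
print("flag for review" if ratio < 0.8 else "within threshold")
```

A low ratio does not prove a model is unfair, but it tells practitioners where to look, and explanation techniques can then show which inputs drive the gap.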

Applications Across Industries

Explainable AI is finding applications across many industries. In healthcare, for instance, where AI is increasingly used for diagnostics and treatment recommendations, a clear understanding of AI-generated insights is paramount. Similarly, in finance, interpretable models help explain risk assessments and investment recommendations, fostering trust among users and regulators.
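
To make the finance example concrete, here is a sketch of how an additive (linear) risk score can explain itself. Each feature's contribution is its weight times its deviation from a reference ("baseline") applicant, and for a linear model these contributions sum exactly to the difference between the two scores. The weights, baseline values, and feature names are hypothetical and not taken from any real scoring model.

```python
from typing import Dict, List, Tuple

# Hypothetical model parameters (illustrative only).
WEIGHTS: Dict[str, float] = {"debt_to_income": 2.0, "late_payments": 0.5, "years_employed": -0.1}
BASELINE: Dict[str, float] = {"debt_to_income": 0.3, "late_payments": 0.0, "years_employed": 5.0}
INTERCEPT = -1.0


def risk_score(applicant: Dict[str, float]) -> float:
    """Linear risk score: intercept plus the weighted sum of features."""
    return INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-feature contributions relative to the baseline applicant, largest first.

    For a linear model these contributions sum exactly to
    risk_score(applicant) - risk_score(BASELINE).
    """
    contributions = [(f, WEIGHTS[f] * (applicant[f] - BASELINE[f])) for f in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


applicant = {"debt_to_income": 0.6, "late_payments": 3.0, "years_employed": 1.0}
for feature, contribution in explain(applicant):
    print(f"{feature:>15}: {contribution:+.2f}")
```

The sorted list can be turned into plain-language reasons ("three late payments raised the risk score the most"), which is the kind of explanation users and regulators can check.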

User Empowerment and Trust Building

One of the key benefits of Explainable AI is user empowerment. When users receive understandable explanations of AI decisions, they can make informed choices and rely on AI-assisted systems with confidence. This transparency builds trust between users and AI applications, paving the way for wider acceptance and adoption of AI technologies.

Challenges in Implementing Explainable AI

While the advances in Explainable AI are promising, challenges persist. Chief among them is the trade-off between accuracy and explainability: the most accurate models are often the hardest to interpret, and striking the right balance remains an ongoing challenge for AI researchers and practitioners. In addition, making explanations clear and meaningful to non-experts still requires refinement.

Regulatory Landscape and Compliance

The regulatory landscape around AI is evolving, with an increasing focus on responsible and ethical deployment. Explainable AI supports these efforts by giving organizations a way to justify automated decisions, which helps with compliance. As regulatory bodies establish guidelines for AI transparency, organizations that adopt Explainable AI are better positioned to keep pace with changing legal requirements.

Educating Stakeholders on XAI

In 2024, there is a growing emphasis on educating stakeholders, including developers, policymakers, and end users, on the principles and benefits of Explainable AI. This education is crucial for fostering a culture of responsible AI deployment. Training AI practitioners to implement and interpret explainable models helps ensure that the broader community can benefit from transparent AI systems.

Looking Ahead: Future Trends in Explainable AI

Explainable AI will keep evolving through 2024 and beyond. Two developments are expected to shape the next phase of AI transparency: integrating XAI with advanced technologies such as deep learning, and developing standardized frameworks for explainability. Collaboration across the AI community will play a pivotal role in defining where Explainable AI goes next.
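
Many explainability techniques used with deep learning are model-agnostic: they treat the model as a black box and probe it from the outside. Permutation feature importance is one of the simplest. Shuffle one feature's column, re-score the model, and measure how much accuracy drops. The sketch below uses a hypothetical stand-in function, `black_box_predict`, in place of a real deep network, plus made-up random data; the technique works the same way for any model that exposes a predict function.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Predictor = Callable[[Row], int]


def accuracy(predict: Predictor, X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows where the prediction matches the label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(predict: Predictor, X: Sequence[Row], y: Sequence[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    base = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical stand-in for an opaque model: it only looks at feature 0.
def black_box_predict(row: Row) -> int:
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box_predict(row) for row in X]  # labels the stand-in model fits perfectly

scores = permutation_importance(black_box_predict, X, y)
print([round(s, 3) for s in scores])
```

Because `black_box_predict` ignores the second feature, its importance comes out as exactly zero, while shuffling the first feature sharply reduces accuracy. Permutation importance can be misleading when features are strongly correlated, so it is best treated as one signal among several.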

In conclusion, Explainable AI has emerged as a critical enabler for the responsible and ethical deployment of artificial intelligence. In 2024, advances in the field are addressing open challenges, increasing transparency, and empowering users. As AI becomes more widespread, Explainable AI will be increasingly indispensable for building a trustworthy and accountable AI ecosystem.